PII Masking Patterns for Customer-Facing Chatbots

The hard truth about PII in chatbots

You cannot stop users from sharing personal data. Warnings do not work. Terms of service do not work. Users will paste credit card numbers, medical records, and social security numbers into your chat widget because the input box is right there and they want help.

In our production data, 23% of customer service conversations contain at least one PII entity. Of those, 67% were volunteered by the user without prompting. The remaining 33% came after the chatbot asked for identifying information to process a request.

The question is not whether PII will enter your system. The question is what happens to it when it does.

Most teams approach this wrong. They either strip PII entirely and break the conversation, or they pass everything through and hope their vendor agreement covers them. Neither works at scale. Strip too aggressively and your bot becomes useless for anything involving user data. Pass everything through and you inherit every compliance obligation your LLM vendor triggers.

Why vendor retention policies create compliance surface area

LLM vendors retain data for safety monitoring, abuse detection, and model improvement. The specifics vary and they change frequently:

| Vendor | Default Retention | ZDR Available | Notes |
|---|---|---|---|
| OpenAI API | 30 days | Yes (eligible endpoints) | Enterprise gets workspace controls |
| Anthropic Claude | 30 days | Yes | Policy-flagged content may be retained up to 2 years |
| Google Vertex AI | No training by default | N/A | Logging policies vary by endpoint |
| AWS Bedrock | No retention by default | N/A | CloudWatch logging often enabled separately |

This retention creates compliance surface area. Under GDPR, transfers of personal data outside the EEA require an adequacy decision or another safeguard such as Standard Contractual Clauses. Under Singapore's PDPA, the Transfer Limitation Obligation requires comparable protection for overseas recipients. Under CCPA, you need to disclose what you collect and who you share it with.

Our position: The only way to stay compliant by default is to prevent PII from leaving your perimeter in the first place. Everything else is a mitigation, not a solution.

The seven patterns compared

We have tested these patterns across healthcare, financial services, and e-commerce deployments. Each has tradeoffs. Pick based on your constraints, not your preferences.

| Pattern | Latency | Context Loss | Best For |
|---|---|---|---|
| Hard strip | ~2ms | Total | Anonymous FAQs only |
| Typed placeholder | ~8ms | None | Most use cases (default choice) |
| Two-stage detection | 3-50ms | None | High-volume, latency-sensitive |
| Context-aware classification | ~25ms | Selective | Regulated industries with fine-grained rules |
| Token vaulting | ~15ms | Partial | Healthcare, finance (PII never leaves infra) |
| Bidirectional masking | ~12ms | None | Multi-turn conversations |
| Differential privacy | ~5ms | Aggregated | Analytics and reporting |

Pattern 1: Hard strip

Remove PII entirely before the message reaches the LLM. The simplest approach, and often the worst.

When to use: Only when PII is never relevant to the task and you need maximum simplicity. Think anonymous FAQ bots where users should not share personal data at all.

Problems: Destroys context. The model sees broken sentences and cannot reason about the missing information. If a user asks "send the confirmation to my email," the model has no idea what email they mean. Resolution rate drops 40% compared to placeholder masking.
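To make the context loss concrete, here is a minimal sketch of hard stripping. The two regexes are illustrative assumptions, not a complete detector, and `hard_strip` is a hypothetical helper name.

```python
import re

# Illustrative patterns only (assumption): real detectors need far broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def hard_strip(text: str) -> str:
    """Delete matched spans outright, leaving no marker of what was removed."""
    for pattern in (EMAIL_RE, SSN_RE):
        text = pattern.sub("", text)
    # Collapse the whitespace gaps left behind by the deletions.
    return re.sub(r"\s{2,}", " ", text).strip()


print(hard_strip("My email is jane@example.com and my SSN is 123-45-6789"))
# prints: My email is and my SSN is
```

The model receives a sentence with holes in it and no hint about what kind of value used to be there.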

Pattern 2: Typed placeholder masking

Replace PII with structured tokens that preserve semantic meaning. This is the sweet spot for most use cases.

The approach: detect sensitive spans like emails, phone numbers, and names, then replace them with typed placeholders such as <EMAIL_1>, <PHONE_1>, <PERSON_1>. Store the mapping server-side. After the LLM responds, restore the original values.

// Example transformation

Input: "My email is jane@example.com and my SSN is 123-45-6789"

Masked: "My email is <EMAIL_1> and my SSN is <SSN_1>"

Map: { EMAIL_1: "jane@example.com", SSN_1: "123-45-6789" }

// LLM sees only the masked version

// Response: "I've noted <EMAIL_1> for your account"

// Final: "I've noted jane@example.com for your account"

When to use: Default choice for customer service, support, and general Q&A. Preserves conversational flow while removing actual sensitive data from vendor calls.

Critical detail: You must add instructions to your system prompt telling the model to preserve tokens exactly as written. Without this, models will sometimes expand or modify the placeholders. Add something like: "Preserve all bracketed tokens (e.g., <EMAIL_1>) exactly as written. Do not expand, explain, or modify them."
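The mask and restore steps can be sketched in a few lines. This is a simplified illustration under stated assumptions: detection is two regexes rather than an NER model, and `mask`, `unmask`, and `PRESERVE_INSTRUCTION` are hypothetical names.

```python
import re

# Illustrative detectors (assumption): a production system would use an NER model.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}
PLACEHOLDER_RE = re.compile(r"<([A-Z]+_\d+)>")

# Appended to the system prompt so the model leaves placeholders untouched.
PRESERVE_INSTRUCTION = (
    "Preserve all bracketed tokens (e.g., <EMAIL_1>) exactly as written. "
    "Do not expand, explain, or modify them."
)


def mask(text):
    """Return (masked_text, mapping) where mapping is placeholder name -> original."""
    mapping = {}   # e.g. {"EMAIL_1": "jane@example.com"}; kept server-side
    seen = {}      # (kind, value) -> placeholder name, so repeats reuse a token
    counters = {}

    def replace_with(kind):
        def repl(match):
            value = match.group(0)
            if (kind, value) not in seen:
                counters[kind] = counters.get(kind, 0) + 1
                name = f"{kind}_{counters[kind]}"
                seen[(kind, value)] = name
                mapping[name] = value
            return f"<{seen[(kind, value)]}>"
        return repl

    for kind, pattern in PATTERNS.items():
        text = pattern.sub(replace_with(kind), text)
    return text, mapping


def unmask(text, mapping):
    """Restore originals; unknown placeholders are left as they are."""
    return PLACEHOLDER_RE.sub(lambda m: mapping.get(m.group(1), m.group(0)), text)


masked, mapping = mask("My email is jane@example.com and my SSN is 123-45-6789")
print(masked)
print(unmask("I've noted <EMAIL_1> for your account", mapping))
```

Leaving unknown placeholders untouched in `unmask` is a deliberate choice here: if the model invents a token such as `<EMAIL_9>`, the user sees an odd token rather than someone else's data.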

Pattern 3: Two-stage detection with fast path

Most messages contain no PII. Running full NER on every message wastes compute and adds latency. A two-stage approach uses a cheap boolean classifier first.

Stage 1: Fast boolean check (under 5ms). Does this message likely contain PII? Uses lightweight pattern matching and a small classifier. If no, skip to LLM immediately.

Stage 2: Full NER extraction (50-100ms). Only runs when PII is detected. Extract and mask all sensitive entities using a larger model.

When to use: High-volume systems where latency matters. The fast path handles "hi", "thanks", and most simple queries without invoking the heavy extractor.

Our numbers: The fast detector adds 3ms p50. The full extractor adds 47ms p50. With 94% of messages taking the fast path (no PII detected), average overhead is about 5.6ms (0.94 × 3ms + 0.06 × 47ms), or about 5.8ms if the gate's 3ms is also counted on the slow path. Compare that to 47ms if you run full extraction on everything.
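A rough sketch of the gate and the overhead arithmetic follows. The regex gate is a stand-in assumption for the "lightweight pattern matching and a small classifier" described above, and `likely_contains_pii`, `process`, and `expected_overhead_ms` are hypothetical names. Note that combining p50 figures this way only approximates a true mean.

```python
import re

# Cheap gate (assumption): an '@' or a run of 4+ digits, allowing separators,
# is treated as "might contain PII". It should over-trigger, not under-trigger.
GATE_RE = re.compile(r"@|(?:\d[\s-]?){4,}")


def likely_contains_pii(text: str) -> bool:
    return GATE_RE.search(text) is not None


def process(text, full_extractor):
    """Route a message: skip extraction unless the gate fires."""
    if not likely_contains_pii(text):
        return text, "fast"
    return full_extractor(text), "full"


def expected_overhead_ms(gate_ms, extractor_ms, fast_share, gate_on_slow_path=True):
    """Approximate average added latency per message."""
    slow_share = 1 - fast_share
    if gate_on_slow_path:
        # Gate runs on every message; extractor only on the slow share.
        return gate_ms + slow_share * extractor_ms
    # Weighted form: each message pays either the gate cost or the extractor cost.
    return fast_share * gate_ms + slow_share * extractor_ms


print(process("thanks", lambda t: "<masked>"))
print(round(expected_overhead_ms(3, 47, 0.94, gate_on_slow_path=False), 2))
print(round(expected_overhead_ms(3, 47, 0.94, gate_on_slow_path=True), 2))
```

The weighted form reproduces the roughly 5.6ms figure quoted above; counting the gate on every message gives a slightly higher number. Either way the gap to always-on extraction is large.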

Pattern 4: Context-aware entity classification

Not all entities are equally sensitive. A first name in a greeting is different from a first name in a medical record. Context-aware classification lets you mask selectively.

The classifier considers:

- Entity type (SSN is always sensitive, first name varies)

- Surrounding context (medical terms nearby elevate sensitivity)

- Conversation topic (benefits discussion vs. general inquiry)

- Regulatory domain configured for the chatbot

When to use: When you need fine-grained control and can tolerate complexity. Useful in regulated industries where some data requires stronger protection than others.

Tradeoff: More configuration, more edge cases, more testing. Only worth it if uniform masking is too aggressive for your use case.
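One way to picture the decision is as a small rule cascade over the four signals listed above. This is a hedged sketch only: the term lists, topic names, domain names, and the `should_mask` helper are all assumptions, and a real classifier would likely be learned rather than hand-written.

```python
from dataclasses import dataclass

# All of these sets are illustrative assumptions, not a recommended policy.
ALWAYS_SENSITIVE = {"SSN", "CREDIT_CARD"}
SENSITIVE_TERMS = {"diagnosis", "prescription", "medication"}
SENSITIVE_TOPICS = {"benefits", "medical"}
STRICT_DOMAINS = {"healthcare"}


@dataclass(frozen=True)
class Entity:
    kind: str   # e.g. "PERSON", "SSN"
    start: int  # character offsets into the message
    end: int


def should_mask(entity, text, topic, domain, window=40):
    """Decide whether to mask one detected entity, checking signals in order."""
    if entity.kind in ALWAYS_SENSITIVE:   # entity type
        return True
    if domain in STRICT_DOMAINS:          # regulatory domain configured for the bot
        return True
    if topic in SENSITIVE_TOPICS:         # conversation topic
        return True
    # Surrounding context: sensitive terms near the entity elevate sensitivity.
    nearby = text[max(0, entity.start - window): entity.end + window].lower()
    return any(term in nearby for term in SENSITIVE_TERMS)


greeting = "Hi, I'm Jane"
refill = "Jane needs her prescription refilled"
print(should_mask(Entity("PERSON", 8, 12), greeting, "general", "retail"))
print(should_mask(Entity("PERSON", 0, 4), refill, "general", "retail"))
```

Every rule in a cascade like this is another edge case to test, which is the tradeoff noted above.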

Pattern 5: Token vaulting with reference IDs

For high-security environments, store PII in a separate vault and pass only reference IDs through the LLM pipeline.

The LLM only ever sees something like "Contact the customer at ref:a1b2c3d4". The actual PII lives in your encrypted vault, never leaves your infrastructure.

When to use: Healthcare, financial services, or any environment where PII must never touch third-party infrastructure, even in masked form.

Architecture notes:

- The vault must be regionally deployed to satisfy data residency requirements

- Use a separate encryption key per tenant for multi-tenant systems

- Set TTLs on vault entries (we default to 24 hours)

- Log access patterns for audit, not actual values
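The vault's core behavior (opaque reference IDs, TTL expiry, value-free audit logging) can be sketched with an in-memory store. This is an assumption-heavy illustration: `TokenVault` is a hypothetical class, and it omits encryption at rest, per-tenant keys, and regional deployment, all of which a real vault needs.

```python
import secrets
import time


class TokenVault:
    """In-memory stand-in for a PII vault; not encrypted, illustration only."""

    def __init__(self, ttl_seconds=24 * 60 * 60, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock      # injectable so expiry can be tested
        self._entries = {}       # ref -> (value, expires_at)
        self.access_log = []     # (operation, ref) pairs; never the value itself

    def put(self, value: str) -> str:
        # 8 hex characters for readability; a real system would use a longer ID.
        ref = f"ref:{secrets.token_hex(4)}"
        self._entries[ref] = (value, self._clock() + self._ttl)
        self.access_log.append(("put", ref))
        return ref

    def get(self, ref: str):
        self.access_log.append(("get", ref))
        entry = self._entries.get(ref)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._entries[ref]   # expired entries are purged on access
            return None
        return value


vault = TokenVault()
ref = vault.put("jane@example.com")
print(f"Contact the customer at {ref}")   # this is all the LLM would see
```

Purging on access keeps the sketch short; a production vault would also need a background sweep so that expired values do not linger unread.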

Pattern 6: Bidirectional masking with session context

In multi-turn conversations, you need consistent masking across the entire session. The same email should get the same placeholder every time it appears.

This pattern maintains a session-scoped entity registry. When PII is detected, the system checks if it matches a previously seen entity. If yes, reuse the same placeholder. If no, assign a new one.

When to use: Any multi-turn conversation where users reference the same PII multiple times. Essential for natural dialogue.
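A session-scoped registry along these lines might look like the sketch below. `SessionRegistry` is a hypothetical name, and normalizing by trimming and lowercasing is an assumption that suits emails but not every entity type.

```python
class SessionRegistry:
    """Assigns stable placeholders to PII values for the life of one session."""

    def __init__(self):
        self._by_value = {}   # (kind, normalized value) -> placeholder name
        self._counters = {}   # kind -> last index used
        self.mapping = {}     # placeholder name -> value as first seen

    def placeholder_for(self, kind: str, value: str) -> str:
        # Assumption: case and surrounding whitespace do not distinguish entities.
        key = (kind, value.strip().lower())
        if key not in self._by_value:
            self._counters[kind] = self._counters.get(kind, 0) + 1
            name = f"{kind}_{self._counters[kind]}"
            self._by_value[key] = name
            self.mapping[name] = value
        return f"<{self._by_value[key]}>"


registry = SessionRegistry()
print(registry.placeholder_for("EMAIL", "jane@example.com"))  # turn 1
print(registry.placeholder_for("EMAIL", "jane@example.com"))  # turn 3, same token
print(registry.placeholder_for("EMAIL", "bob@company.com"))   # turn 5, new token
```

One registry instance per session, discarded when the session ends, keeps placeholders from leaking across users.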

Example flow:

Turn 1: "Email me at jane@example.com" → <EMAIL_1>

Turn 3: "Actually use jane@example.com" → <EMAIL_1> (same reference)

Turn 5: "CC my work email bob@company.com" → <EMAIL_2> (new entity)

Pattern 7: Differential privacy for analytics

When you need to analyze conversation patterns without exposing individual PII, apply differential privacy to aggregated metrics.

This pattern adds calibrated noise to aggregate statistics. You can report "23% of conversations mention email addresses" without storing which conversations or which addresses.

When to use: Analytics dashboards, A/B testing, and any reporting where you need aggregate insights without individual exposure.
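As a rough illustration of calibrated noise, the sketch below applies the Laplace mechanism to a count. It assumes each conversation contributes at most 1 to the count (sensitivity 1); `dp_count` and `laplace_noise` are hypothetical names, and production use should rely on a vetted differential privacy library rather than hand-rolled sampling.

```python
import random


def laplace_noise(scale: float, rng) -> float:
    """Sample Laplace(0, scale) as the difference of two exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_count(true_count: int, epsilon: float, rng=None) -> float:
    """Release a count with epsilon-DP noise, assuming sensitivity 1."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.SystemRandom()   # OS entropy by default
    # Laplace mechanism: scale = sensitivity / epsilon = 1 / epsilon.
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Smaller epsilon means stronger privacy and a noisier reported figure.
conversations_with_email = 230
print(dp_count(conversations_with_email, epsilon=1.0))
print(dp_count(conversations_with_email, epsilon=0.1))
```

The reported value changes on every call, so publish one noisy figure per query rather than re-sampling, since repeated releases consume privacy budget.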

The HoverBot architecture

We combine patterns 2, 3, and 6 into a unified pipeline:

- Fast gate: Boolean PII detector runs on every message (3ms p50). No PII detected? Skip to LLM.

- Full extraction: NER model identifies and classifies entities (47ms p50). Only runs when gate fires.

- Session-aware masking: Consistent placeholders across the conversation using session registry.

- LLM call: Masked text goes to the model with placeholder preservation instructions.

- Server-side unmask: Placeholders restored to original values before user sees the response.

The mapping never leaves our infrastructure. We pin processing to regional deployments for data residency. Where we must call external LLMs, we enable vendor ZDR options when available.
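The ordering of these stages can be sketched as a single function with the components injected. This is not HoverBot's code: `handle_turn`, `demo_gate`, and `demo_extract_and_mask` are hypothetical names, and the email-only masker stands in for the real gate and NER model.

```python
import re

PLACEHOLDER_RE = re.compile(r"<([A-Z]+_\d+)>")
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def unmask(text, mapping):
    """Restore known placeholders; leave unknown ones untouched."""
    return PLACEHOLDER_RE.sub(lambda m: mapping.get(m.group(1), m.group(0)), text)


def handle_turn(user_text, session_mapping, gate, extract_and_mask, call_llm):
    """One turn: gate, mask if needed, call the model, then always unmask."""
    outbound = user_text
    if gate(user_text):
        outbound = extract_and_mask(user_text, session_mapping)
    reply = call_llm(outbound)
    # Unmask even on the fast path: the model may echo a placeholder
    # that was introduced in an earlier turn.
    return unmask(reply, session_mapping)


def demo_gate(text):
    return "@" in text   # stand-in for the boolean PII detector


def demo_extract_and_mask(text, session_mapping):
    """Email-only stand-in for NER plus session-aware masking."""
    def repl(match):
        value = match.group(0)
        for name, known in session_mapping.items():
            if known == value:
                return f"<{name}>"
        name = f"EMAIL_{len(session_mapping) + 1}"
        session_mapping[name] = value
        return f"<{name}>"
    return EMAIL_RE.sub(repl, text)
```

A call such as `handle_turn(text, mapping, demo_gate, demo_extract_and_mask, my_llm)` takes any callable that maps a prompt string to a reply string, which keeps the vendor client out of the masking logic.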

Detection accuracy: what actually matters

Most PII handling advice focuses on detection accuracy. That is the wrong optimization target. In production, you care about impact, not precision scores.

| Error Type | Impact with ZDR | Impact without ZDR | Mitigation |
|---|---|---|---|
| False negative (PII missed) | Compliance note | Potential breach | Enable ZDR, tune recall |
| False positive (non-PII masked) | Minor UX issue | Minor UX issue | Tune precision, add exceptions |
| Entity type mismatch | Wrong placeholder | Wrong placeholder | Usually harmless, monitor |

Our recommendation: Tune for the failure mode that hurts most. If you have ZDR enabled, optimize for precision (reduce false positives that annoy users). If you cannot enable ZDR, optimize for recall (catch everything, accept some over-masking).
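One way to encode that preference when comparing detector configurations is an F-beta score, which weights recall more heavily when beta is above 1 and precision more heavily when it is below 1. The beta values of 0.5 and 2.0 below are assumptions chosen for illustration, and `tuning_score` is a hypothetical helper.

```python
def precision_recall(predicted: set, gold: set):
    """Span-level precision and recall over exact-match (start, end) spans."""
    true_positives = len(predicted & gold)
    precision = true_positives / len(predicted) if predicted else 1.0
    recall = true_positives / len(gold) if gold else 1.0
    return precision, recall


def f_beta(precision: float, recall: float, beta: float) -> float:
    """Standard F-beta: beta > 1 favors recall, beta < 1 favors precision."""
    if precision == 0 and recall == 0:
        return 0.0
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


def tuning_score(predicted: set, gold: set, zdr_enabled: bool) -> float:
    # Assumed weights: favor precision with ZDR, favor recall without it.
    beta = 0.5 if zdr_enabled else 2.0
    return f_beta(*precision_recall(predicted, gold), beta)


gold = {(0, 5), (10, 15), (30, 35), (40, 45)}
predicted = {(0, 5), (10, 15), (20, 25)}
print(tuning_score(predicted, gold, zdr_enabled=True))
print(tuning_score(predicted, gold, zdr_enabled=False))
```

The same detector output scores differently under the two settings, which makes the chosen tradeoff explicit when comparing thresholds on a labeled evaluation set.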

Implementation checklist

- ☐ Choose masking pattern based on compliance requirements

- ☐ Deploy PII detection model in same region as user data

- ☐ Add placeholder preservation instructions to system prompts

- ☐ Implement session-scoped mapping for multi-turn conversations

- ☐ Set TTL on all PII mappings (24 hours is a reasonable default)

- ☐ Log masking decisions for audit without logging actual PII values

- ☐ Enable vendor ZDR where available

- ☐ Test with adversarial inputs (encoded PII, partial matches, edge cases)

- ☐ Monitor false positive rate and adjust thresholds based on user feedback

- ☐ Document your pattern choice and the reasoning for auditors

The opinionated take

The industry treats PII protection as a compliance checkbox. Run a scanner, check the box, move on. This is backwards.

PII protection is an architectural decision that shapes your entire chatbot pipeline. The pattern you choose affects latency, conversation quality, debugging complexity, and incident response. Picking the wrong pattern creates problems you will live with for years.

Three principles we have learned the hard way:

- Default to typed placeholders. Hard stripping sounds safe but destroys conversations. Placeholder masking preserves context with minimal overhead.

- Build for multi-turn from day one. Single-message masking breaks when the same user mentions their email in turn 1 and references "my email" in turn 5. Session-aware masking is not optional.

- Vendor ZDR is not a substitute for client-side protection. ZDR reduces your exposure but does not eliminate it. The safest PII is PII that never leaves your perimeter.

A privacy banner is not a privacy control. If you are shipping a customer-facing chatbot, build the PII layer from day one. The patterns exist. The tools work. The only question is whether you implement them before or after your first incident.

Share this article

Related Articles

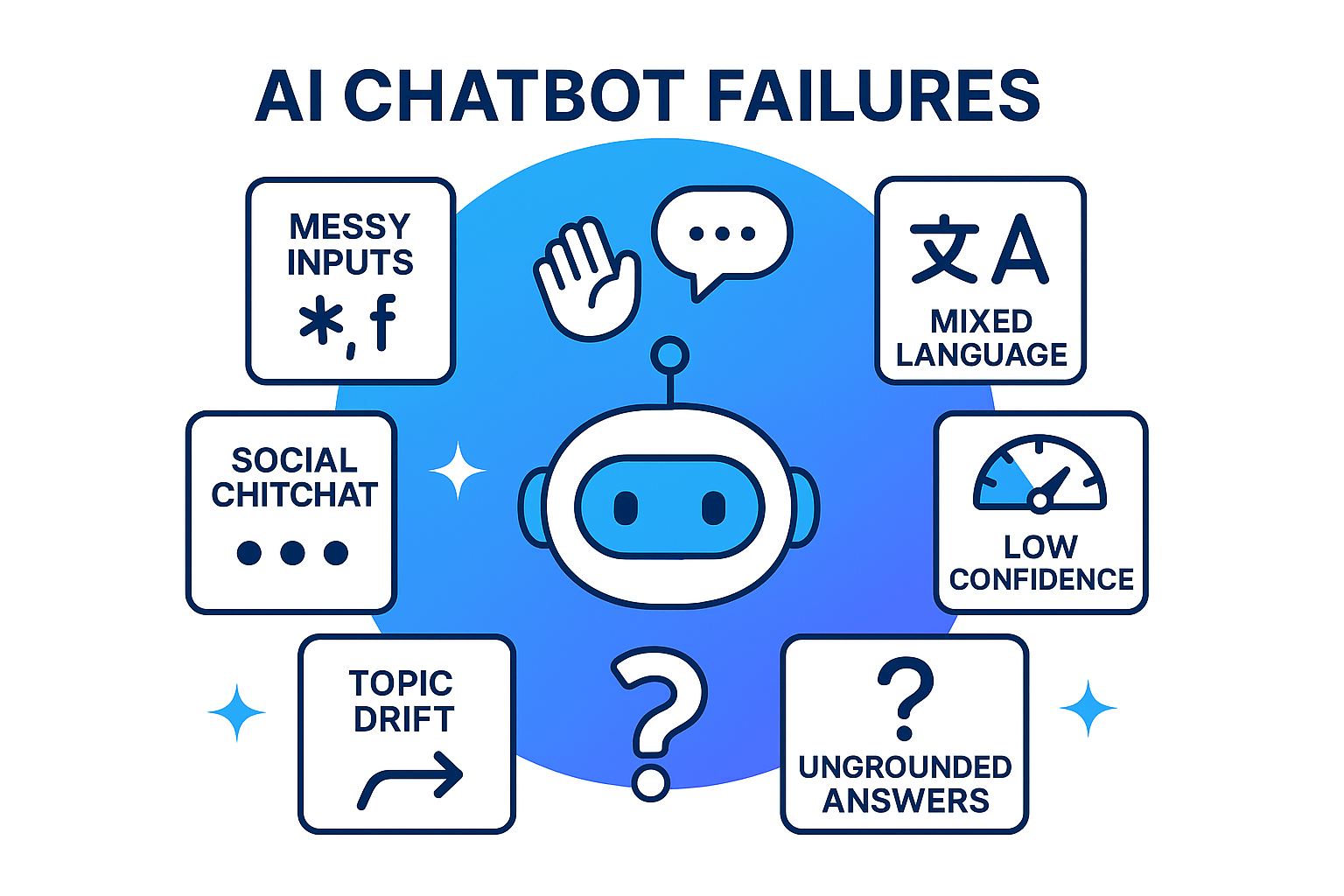

Why Building a Customer Facing Chatbot Is Hard (and How to Fix It)

The hardest part is not the model, it is the human layer. Learn how to detect social intent, run guardrails, ground answers with retrieval, and use confidence gates to build chatbots that sound human, not robotic.

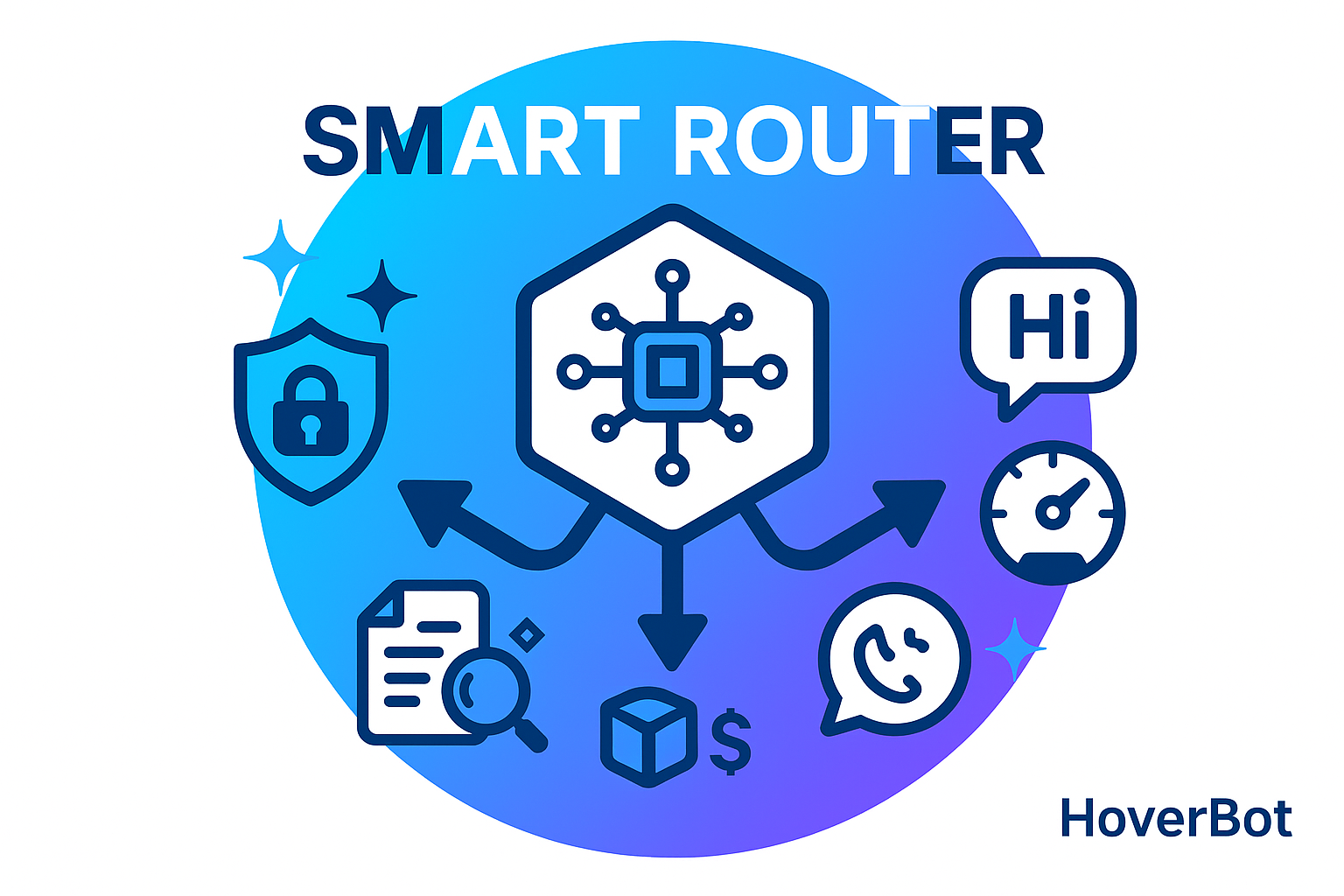

Routing Beats Bigger Models: A Production Architecture

GPT-4o costs 15x more than GPT-4o-mini. Claude Opus costs 30x more than Haiku. The question is not which model to use. The question is which model to use for each request. A smart router cuts cost 70% while improving quality.