Why Building a Customer-Facing Chatbot Is Hard (and How to Fix It)

TL;DR

The hardest part is not the model; it is the human layer. Detect social intent up front, run guardrails before generation, ground answers with retrieval, and use confidence gates to decide whether to answer, ask a clarifying question, or hand off to a person. Do this and your bot sounds human, not robotic.

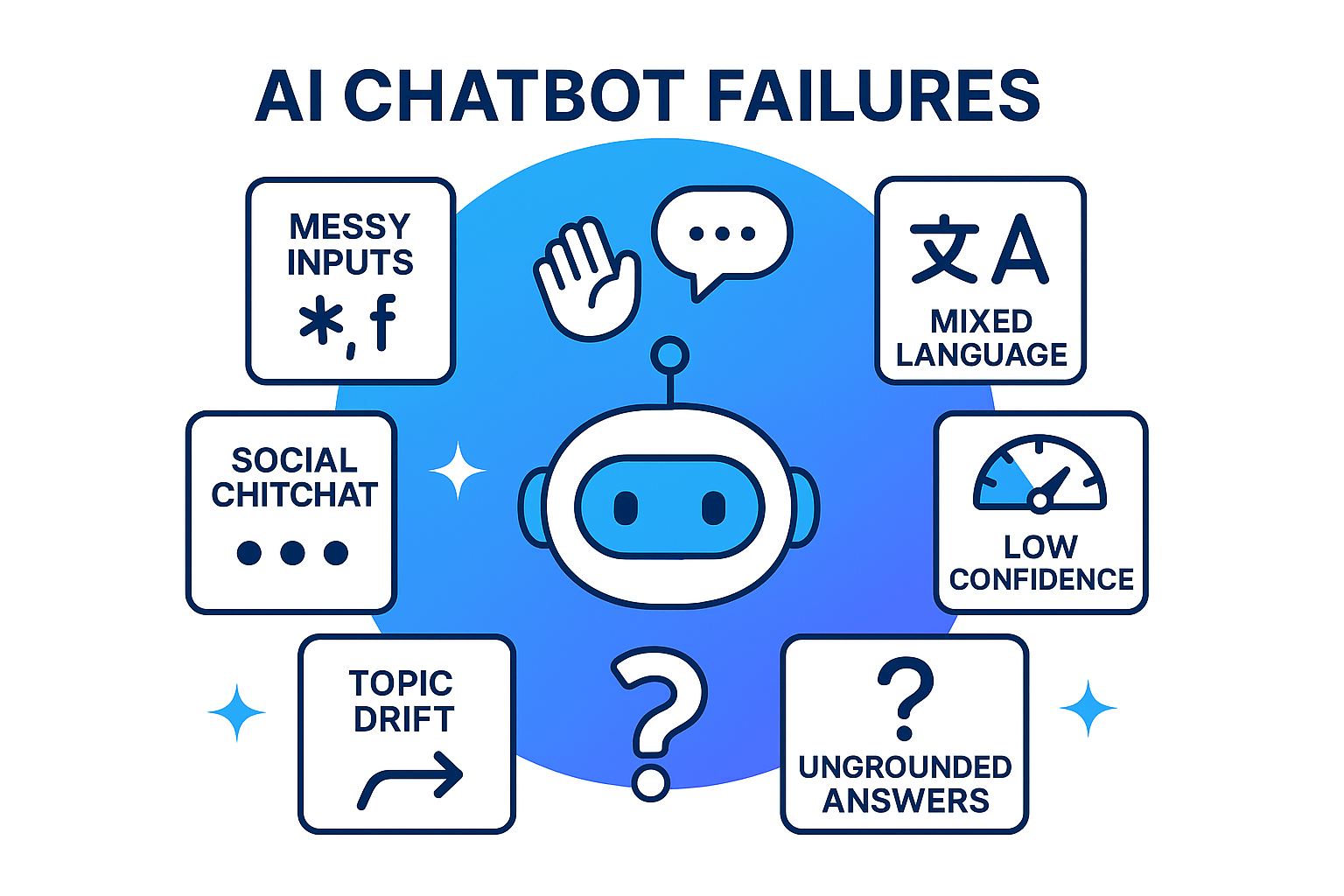

The messy reality of customer conversations

People do not speak in tidy FAQ titles. They mix greetings with questions ("hi there pricing?"), toss in a quick thanks, vent, or send noise like "hfdf" or 🙏👏🙂. They switch topics mid-thread and often write in a different language than your knowledge base. If you push all of this through retrieval, social signals dilute queries and raise the odds of a wrong answer. Handle the social layer first, then the real question.

Five hard problems (and fixes)

1. Greeting pollution

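"hi pricing?" is a pricing question, but if it goes to retrieval as-is, the "hi" dilutes the query and can pull back greeting-flavored matches instead of pricing content.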

Fix: Detect greeting-only vs greeting-with-content, strip greetings before retrieval, then add a warm opener back into the final reply.
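A minimal sketch of this fix, assuming a small hypothetical English greeting lexicon and a hypothetical `answer_fn` callable that stands in for retrieval plus generation. Retrieval only ever sees the stripped content; the warm opener is added back to the reply afterwards.

```python
import re
from typing import Callable

# Hypothetical greeting lexicon; a real system would tune this per locale.
# The \b stops "hi" from matching the start of words like "history".
GREETING_PATTERN = re.compile(
    r"^\s*(hi|hello|hey|hiya|good (morning|afternoon|evening))( there)?\b[\s,!.]*",
    re.IGNORECASE,
)

def split_greeting(message: str) -> tuple[bool, str]:
    """Return (had_greeting, remaining_content) for a user message."""
    match = GREETING_PATTERN.match(message)
    if not match:
        return False, message.strip()
    return True, message[match.end():].strip()

def classify_greeting(message: str) -> str:
    """Label a message as greeting_only, greeting_with_content, or no_greeting."""
    had_greeting, content = split_greeting(message)
    if had_greeting and not content:
        return "greeting_only"
    if had_greeting:
        return "greeting_with_content"
    return "no_greeting"

def build_reply(message: str, answer_fn: Callable[[str], str]) -> str:
    """Answer the content without the greeting, then add a warm opener back."""
    kind = classify_greeting(message)
    _, content = split_greeting(message)
    if kind == "greeting_only":
        return "Hi! How can I help you today?"  # no retrieval needed
    answer = answer_fn(content)  # retrieval sees only "pricing?", not "hi pricing?"
    return f"Hi! {answer}" if kind == "greeting_with_content" else answer
```

For example, `split_greeting("hi there pricing?")` yields `(True, "pricing?")`, so the query that reaches retrieval is just the pricing question.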

2. Social-only turns (thanks, farewell, praise, frustration, small talk)

A lone "thanks!" should not trigger a model call.

Fix: Fast-path social-only turns with micro-acknowledgements and no model call; if the message also contains a question, add a short acknowledgement and then answer it.
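One way to sketch the fast path, assuming hypothetical keyword patterns for each social intent, a hypothetical list of filler words, and a hypothetical `answer_fn` callable for the model path. A keyword approach like this is deliberately crude; a production system might use a small intent classifier instead. The key property is that a purely social turn never reaches `answer_fn`.

```python
import re
from typing import Callable, Optional

# Hypothetical patterns for social intents; checked in insertion order.
SOCIAL_PATTERNS = {
    "thanks": re.compile(r"\b(thanks|thank you|thx|ty|cheers)\b", re.I),
    "farewell": re.compile(r"\b(bye|goodbye|see you)\b", re.I),
    "praise": re.compile(r"\b(great|awesome|perfect|love it)\b", re.I),
    "frustration": re.compile(r"\b(useless|annoying|ridiculous|not helpful)\b", re.I),
}
ACKS = {
    "thanks": "You're welcome!",
    "farewell": "Take care!",
    "praise": "Glad that helped!",
    "frustration": "Sorry this has been frustrating.",
}
# Hypothetical filler words that do not count as a real question on their own.
FILLER = {"so", "much", "very", "a", "lot", "again", "ok", "okay", "and", "you"}

def detect_social(message: str) -> tuple[Optional[str], str]:
    """Return (social_intent or None, leftover content after removing the social cue)."""
    for intent, pattern in SOCIAL_PATTERNS.items():
        if pattern.search(message):
            leftover = pattern.sub("", message)
            # Collapse punctuation and emoji into spaces, keeping "?" as a question hint.
            leftover = re.sub(r"[^\w?]+", " ", leftover).strip()
            if all(w.lower().strip("?") in FILLER for w in leftover.split()):
                leftover = ""  # e.g. "thank you so much!" is still social-only
            return intent, leftover
    return None, message.strip()

def handle_turn(message: str, answer_fn: Callable[[str], str]) -> str:
    intent, content = detect_social(message)
    if intent is None:
        return answer_fn(content)        # normal question path
    ack = ACKS[intent]
    if not content:
        return ack                       # social-only: no model call
    return f"{ack} {answer_fn(content)}" # acknowledge, then answer
```

A lone "thanks!" returns "You're welcome!" immediately, while "thanks, how do I reset my password?" sends only "how do I reset my password?" to the model path.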

3. Low-signal noise

Inputs like "hfdf" waste tokens and return noise.

Fix: Detect noise and reply with a gentle nudge or suggestion chips; no model call is required.
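A rough noise check can be sketched with a pronounceability heuristic: treat a message as low-signal if it has no letters or digits at all (emoji, punctuation) or if none of its Latin-script words contains a vowel. This is an assumption, not a standard algorithm; it is meant to run after the social layer, because short social tokens like "thx" would otherwise look like noise. The chip labels are hypothetical.

```python
import re

VOWELS = set("aeiouyáéíóúü")
SUGGESTION_CHIPS = ["Pricing", "Reset password", "Talk to a person"]  # hypothetical

def _looks_plausible(word: str) -> bool:
    # Non-ASCII words (other scripts, accented forms) are given the benefit of the doubt.
    return bool(VOWELS & set(word)) or not word.isascii()

def is_low_signal(message: str) -> bool:
    """Heuristic: True for emoji/punctuation-only input or vowel-less keyboard mash."""
    words = re.findall(r"[^\W\d_]+", message.lower())  # runs of letters only
    if not words:
        # Digits alone (an order number, say) are meaningful; nothing at all is noise.
        return not re.search(r"\d", message)
    return not any(_looks_plausible(w) for w in words)

def noise_reply() -> dict:
    """Gentle nudge plus suggestion chips; no model call."""
    return {
        "text": "Sorry, I didn't catch that. What can I help you with?",
        "chips": SUGGESTION_CHIPS,
    }
```

"hfdf" and "🙏👏🙂" are flagged, while "pricing?", "12345", and "¿más información?" pass through to the normal pipeline.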

4. Multilingual inputs

Users type in Spanish while your knowledge base is English.

Fix: Detect the user's language, translate the KB answer into that language, and ask clarifying questions in that language too.
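A minimal sketch of the flow, with two loudly labeled assumptions. First, language detection here just counts a few hypothetical stopwords; production systems usually use a trained language-ID model. Second, `kb_answer` and `translate` are hypothetical callables you would supply. This sketch also translates the query into the KB's language before retrieval, which is one option; multilingual embeddings are another.

```python
import re
from typing import Callable

# Tiny hypothetical stopword lists, for illustration only.
STOPWORDS = {
    "en": {"the", "is", "what", "how", "do", "i", "my", "you", "your", "and", "to"},
    "es": {"el", "la", "es", "qué", "que", "cómo", "como", "mi", "tu", "y", "de", "los", "las"},
}

def detect_language(text: str, default: str = "en") -> str:
    """Pick the language whose stopwords appear most often; fall back to default."""
    tokens = re.findall(r"[^\W\d_]+", text.lower())
    scores = {lang: sum(t in words for t in tokens) for lang, words in STOPWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else default

def answer_in_user_language(
    question: str,
    kb_answer: Callable[[str], str],            # hypothetical: retrieval + generation over the KB
    translate: Callable[[str, str, str], str],  # hypothetical: (text, src_lang, dst_lang) -> text
    kb_lang: str = "en",
) -> str:
    user_lang = detect_language(question)
    if user_lang == kb_lang:
        return kb_answer(question)
    # Query into the KB language for retrieval, answer back into the user's language.
    answer = kb_answer(translate(question, user_lang, kb_lang))
    return translate(answer, kb_lang, user_lang)
```

Clarifying questions can reuse the same `detect_language` result so the bot never switches languages on the user mid-thread.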

5. Grounding, confidence, and failing well

Bots often answer confidently on weak evidence, or they shut down with "sorry, I can't help."

Fix: Use hybrid retrieval (dense plus keyword), rerank, and compute a confidence score. High confidence: answer with citations. Medium: ask one clarifying question. Low: say you don't know and offer next steps. If confidence stays low or the topic is sensitive, hand off to a human.
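The sketch below shows two pieces of this fix. For fusing the dense and keyword result lists it uses reciprocal rank fusion (RRF), one common technique, not necessarily the only or best choice; `k = 60` is a widely used default. The router then maps a confidence score (assumed to come from a reranker and be normalized to 0 to 1) onto answer, clarify, don't know, or handoff. Both thresholds are hypothetical and should be tuned on real conversations.

```python
# Hypothetical thresholds on a 0-1 confidence score.
HIGH, MEDIUM = 0.75, 0.45

def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> dict[str, float]:
    """Fuse ranked doc-id lists (e.g. dense and keyword) with RRF, best first."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return dict(sorted(scores.items(), key=lambda kv: kv[1], reverse=True))

def route(confidence: float, low_streak: int, sensitive: bool) -> str:
    """Choose the next action.

    low_streak counts the consecutive low-confidence turns before this one.
    """
    if sensitive:
        return "handoff"
    if confidence >= HIGH:
        return "answer_with_citations"
    if confidence >= MEDIUM:
        return "ask_clarifier"
    # Low confidence: admit it once, escalate if it happens again in a row.
    return "handoff" if low_streak >= 1 else "dont_know_with_next_steps"
```

A document ranked well by both retrievers rises to the top of the fused list even if neither ranked it first, which is the main reason to fuse rather than pick one retriever.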

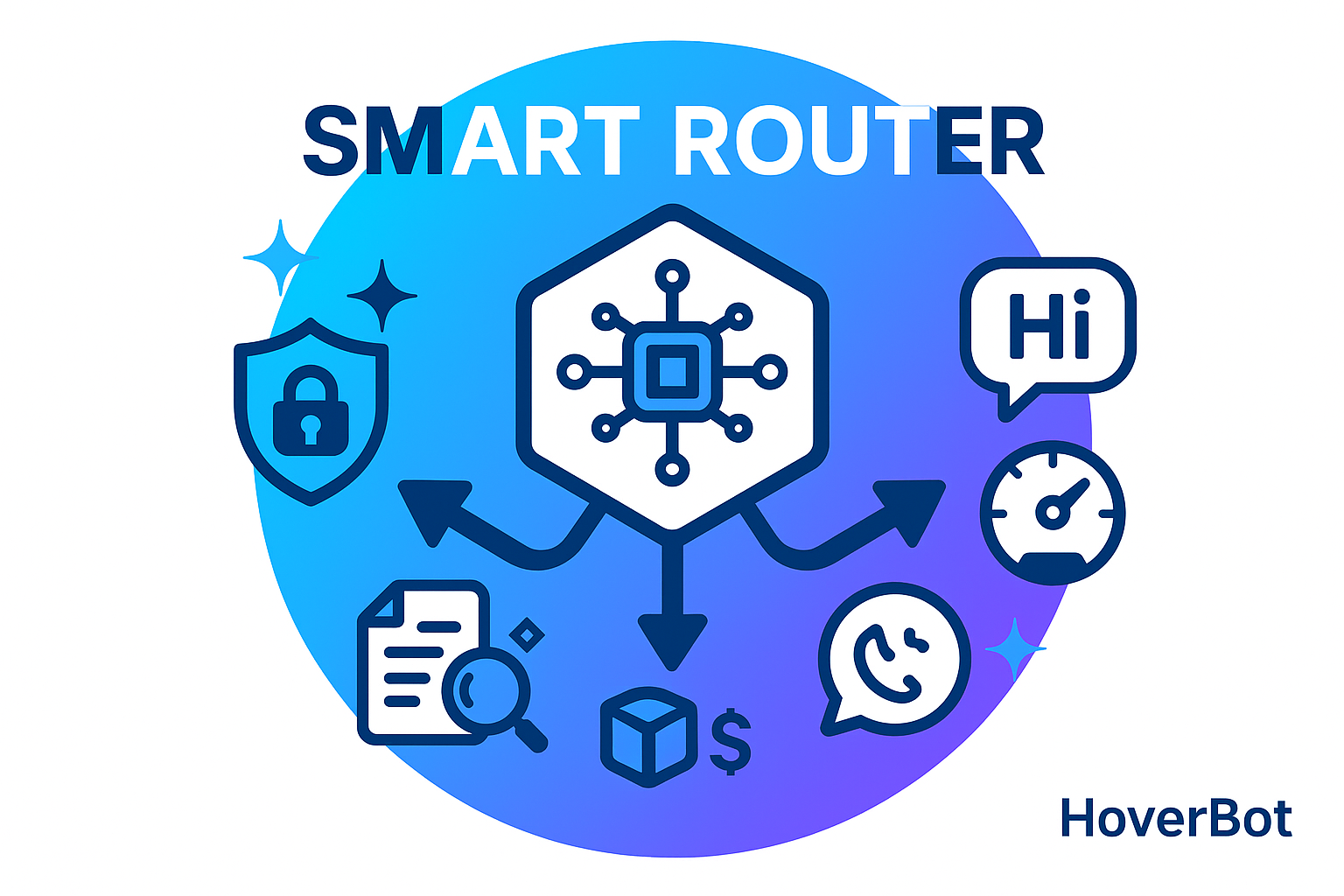

Guardrails before the model

Strong chatbots decide what is allowed before the model runs. Filter abuse and policy-restricted topics, scope-gate intents, and enforce evidence rules so no answer is given without a usable source. Validate outputs against a simple schema: answer, sources, confidence, next step. These controls keep responses safe, predictable, and easy to trust.
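Here is a minimal sketch of both halves: a pre-model gate and an output validator for the four-field shape. The blocked-topic list, in-scope intents, and `next_step` values are hypothetical placeholders, and the sketch assumes topic and intent labels are already produced by an earlier classification step.

```python
from dataclasses import dataclass, field
from typing import Optional

BLOCKED_TOPICS = {"medical diagnosis", "legal advice"}            # hypothetical policy list
IN_SCOPE_INTENTS = {"pricing", "billing", "account", "shipping"}  # hypothetical scope
NEXT_STEPS = {"none", "clarify", "handoff"}                       # hypothetical values

@dataclass
class BotReply:
    answer: str
    sources: list[str] = field(default_factory=list)
    confidence: float = 0.0
    next_step: str = "none"

def pre_model_gate(topic: str, intent: str, sources: list[str]) -> Optional[str]:
    """Return a refusal reason, or None if the model is allowed to run."""
    if topic in BLOCKED_TOPICS:
        return "policy_restricted"
    if intent not in IN_SCOPE_INTENTS:
        return "out_of_scope"
    if not sources:
        return "no_evidence"  # evidence rule: never generate without a usable source
    return None

def validate_reply(raw: dict) -> BotReply:
    """Coerce model output into the fixed shape, raising ValueError if it breaks a rule."""
    reply = BotReply(
        answer=str(raw.get("answer", "")).strip(),
        sources=list(raw.get("sources", [])),
        confidence=float(raw.get("confidence", 0.0)),
        next_step=str(raw.get("next_step", "none")),
    )
    if not reply.answer:
        raise ValueError("empty answer")
    if not 0.0 <= reply.confidence <= 1.0:
        raise ValueError("confidence out of range")
    if reply.next_step not in NEXT_STEPS:
        raise ValueError(f"unknown next_step: {reply.next_step}")
    if reply.next_step == "none" and not reply.sources:
        raise ValueError("direct answer without sources")
    return reply
```

Rejecting a malformed reply and falling back to a clarifier or handoff is usually safer than trying to repair it in place.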

Human handoff that feels natural

Escalation should feel like help, not failure. Trigger a handoff on sensitive topics, repeated frustration, or two low-confidence turns in a row, and always on explicit requests to "talk to a person." Give the agent a one-line summary, a compact transcript, the last user question, and the top candidate sources. Let the bot keep listening, suggest next actions to the agent, and then resume smoothly once the issue is resolved.
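The trigger rules and the agent packet above can be sketched as follows. The handoff phrases, the 0.45 low-confidence threshold, the `frustrated` flag (assumed to come from the social layer), and the one-line `summary` (assumed to come from a separate summarizer) are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Turn:
    role: str                 # "user" or "bot"
    text: str
    confidence: float = 1.0   # meaningful on bot turns; assumed 0-1
    frustrated: bool = False  # meaningful on user turns; set by the social layer

HANDOFF_PHRASES = ("talk to a person", "speak to a human", "real person", "human agent")  # hypothetical
LOW = 0.45  # hypothetical low-confidence threshold

def should_hand_off(turns: list[Turn], sensitive: bool) -> bool:
    last_user = next((t for t in reversed(turns) if t.role == "user"), None)
    if last_user and any(p in last_user.text.lower() for p in HANDOFF_PHRASES):
        return True  # explicit requests always escalate
    if sensitive:
        return True
    if sum(t.frustrated for t in turns if t.role == "user") >= 2:
        return True  # repeated frustration
    bot_conf = [t.confidence for t in turns if t.role == "bot"]
    # Two low-confidence bot turns in a row.
    return len(bot_conf) >= 2 and all(c < LOW for c in bot_conf[-2:])

def handoff_packet(turns: list[Turn], summary: str, sources: list[str], max_turns: int = 6) -> dict:
    """Context for the human agent: summary, compact transcript, last question, top sources."""
    last_question = next((t.text for t in reversed(turns) if t.role == "user"), "")
    return {
        "summary": summary,
        "transcript": [f"{t.role}: {t.text}" for t in turns[-max_turns:]],
        "last_user_question": last_question,
        "top_sources": sources[:3],
    }
```

Keeping the transcript short and the sources ranked lets the agent pick up the thread without rereading the whole conversation.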

HoverBot: our approach

At HoverBot, the social layer never touches retrieval. Social-only turns are handled instantly with lightweight logic. Real questions flow through hybrid retrieval, reranking, and a confidence gate that blends retrieval scores with recency and source authority. We only answer when at least one high-score citation is available; otherwise we ask a clarifier or hand off. Agents receive a context-rich summary so they can respond quickly, and when they resolve the issue the bot picks up the thread without an awkward restart.
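To make the idea of a blended confidence gate concrete, here is an illustrative sketch. It is not HoverBot's implementation: the weights, the 180-day recency half-life, the authority scale, and the citation threshold are all hypothetical. It combines a normalized retrieval score with exponential recency decay and a source-authority score, and only allows an answer when at least one candidate clears the citation threshold.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    doc_id: str
    retrieval_score: float  # reranker score, assumed normalized to 0-1
    age_days: float         # days since the source was last updated
    authority: float        # 0-1, e.g. official policy page = 1.0 (hypothetical scale)

# Hypothetical weights (summing to 1) and thresholds.
W_RETRIEVAL, W_RECENCY, W_AUTHORITY = 0.6, 0.15, 0.25
HALF_LIFE_DAYS = 180.0
CITATION_THRESHOLD = 0.7

def blended_score(c: Candidate) -> float:
    recency = 0.5 ** (c.age_days / HALF_LIFE_DAYS)  # 1.0 when fresh, halves every half-life
    return W_RETRIEVAL * c.retrieval_score + W_RECENCY * recency + W_AUTHORITY * c.authority

def gate(candidates: list[Candidate]) -> tuple[str, list[str]]:
    """Answer only if at least one candidate is a high-score citation."""
    scored = sorted(((blended_score(c), c.doc_id) for c in candidates), reverse=True)
    citations = [doc_id for score, doc_id in scored if score >= CITATION_THRESHOLD]
    if citations:
        return "answer", citations
    return "clarify_or_handoff", []
```

With these placeholder weights, a fresh official pricing page with a 0.9 retrieval score scores about 0.94 and is citable, while a two-year-old low-authority blog post with a 0.8 retrieval score scores about 0.54 and is not.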

The result is a conversation that is fast and trustworthy: efficient when AI is enough, empathetic when humans are needed.

Final thought

The smartest chatbots are not the flashiest models; they are the systems that reflect how people really talk: messy, social, and unpredictable. Handle greetings and small talk outside retrieval, ground answers with evidence, set boundaries before generation, and always leave a path to a human. Do this and the model feels brilliant, not because it is perfect, but because the experience is.
