The Agent Layer for Chat Widgets: How to Stay Useful in an Agentic Web

The shift to agentic browsing matters for chat widgets because they sit at the intersection of three things agents need: context, intent, and execution. A widget can answer questions, but it can also route to live systems, trigger workflows, and enforce policy. In an agentic web, that makes the widget less like a support bubble and more like the safest control surface a site has.

We already wrote about the agentic web arriving. This is the practical follow-up: what to change in your chatbot widget so it works with agents instead of competing with them, and so advanced tasks can be completed without fragile UI scraping.

Why agents struggle with normal widgets

Most chat widgets were built for humans who will read an answer and then click around. Agents behave differently. They arrive with a job in mind: check stock, compare plans, book a demo, start a return, open a ticket. If the site does not offer a clean path, the agent will guess by reading HTML, clicking buttons, and trying form flows like a user would.

That approach is brittle. Websites change. A/B tests move buttons. Product details are split across tabs, tooltips, PDFs, and dynamic components. Even when the agent is correct, you cannot easily audit why it made a decision or what data it used.

There is also a safety issue. In agentic browsing, the agent is constantly exposed to untrusted content. If your only interface is "read the page and act," you raise the odds that an agent is misled by deceptive page text, stale UI, or adversarial instructions embedded in content (prompt injection).

The fix is not to make the agent smarter. The fix is to give it a safer interface.

The agent layer, explained simply

The agent layer is a small, practical upgrade to the widget. It does not require new standards, and it does not require publishing secrets. It just makes three things explicit.

First, the widget needs to declare what it can do and what rules it follows. Agents should not have to infer capability by trial and error. They should be able to discover, quickly, whether the widget supports tasks like creating a ticket or scheduling a demo, what languages it supports, and what actions require confirmation.
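
As an illustration, here is a minimal Python sketch of a capability manifest. The schema is hypothetical. That covers `ActionSpec`, `CapabilityManifest`, and field names such as `requires_confirmation`. It is not a published standard or HoverBot's actual format. The sketch only shows that supported actions, languages, and confirmation rules can be declared as plain data. An agent can then read them once instead of probing by trial and error.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ActionSpec:
    name: str
    description: str
    requires_confirmation: bool


@dataclass
class CapabilityManifest:
    widget: str
    languages: list[str]
    actions: list[ActionSpec] = field(default_factory=list)

    def supports(self, action_name: str) -> bool:
        """True if the widget declares an action with this name."""
        return any(a.name == action_name for a in self.actions)

    def needs_confirmation(self, action_name: str) -> bool:
        """Return the declared confirmation rule; undeclared actions raise KeyError."""
        for a in self.actions:
            if a.name == action_name:
                return a.requires_confirmation
        raise KeyError(action_name)

    def to_json(self) -> str:
        # asdict() recurses into the nested ActionSpec dataclasses.
        return json.dumps(asdict(self), indent=2)


# Illustrative manifest; every name and value here is hypothetical.
manifest = CapabilityManifest(
    widget="support-widget",
    languages=["en", "de"],
    actions=[
        ActionSpec("answer_question", "Answer from the knowledge base", False),
        ActionSpec("create_ticket", "Open a support ticket", True),
        ActionSpec("schedule_demo", "Book a product demo", True),
    ],
)
print(manifest.to_json())
```

The sketch leaves open how the manifest is served, for example at a fixed URL or through a widget API call.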

Second, the widget should respond with structure, not only prose. Humans want a paragraph. Agents need the facts separated from the explanation: key entities found, confidence signals, suggested next steps, and whether a sensitive action requires user confirmation.
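
A structured reply can carry the prose for the human alongside fields an agent can act on. The sketch below assumes a hypothetical `StructuredAnswer` shape. Its field names, the example policy text, and the confidence value are illustrative only.

```python
from dataclasses import asdict, dataclass, field


@dataclass
class NextStep:
    action: str                  # name of a declared action the agent may call
    requires_confirmation: bool  # True means: ask the user before calling it


@dataclass
class StructuredAnswer:
    text: str                                               # prose for the human
    entities: dict[str, str] = field(default_factory=dict)  # key facts, separated from the prose
    confidence: float = 0.0                                 # 0..1 signal, however the backend derives it
    sources: list[str] = field(default_factory=list)        # documents the answer was drawn from
    next_steps: list[NextStep] = field(default_factory=list)

    @property
    def needs_user_confirmation(self) -> bool:
        return any(step.requires_confirmation for step in self.next_steps)


# Illustrative values only.
answer = StructuredAnswer(
    text="Unopened items can be returned within 30 days of delivery.",
    entities={"policy": "returns", "return_window_days": "30"},
    confidence=0.86,
    sources=["returns-policy.md"],
    next_steps=[NextStep("start_return", requires_confirmation=True)],
)
payload = asdict(answer)  # plain dict, ready to serialize as JSON for the agent
```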

Third, the widget should offer controlled actions instead of encouraging UI automation. When something must be done, such as creating a ticket, scheduling a booking, or running an inventory query, it should happen through an approved action path that enforces permissions, privacy, and auditing on the server.
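
Here is a minimal sketch of a controlled action path, assuming a Python backend. `ActionRegistry`, the permission strings, and the audit-entry fields are hypothetical. The agent can only invoke actions the server has registered. Each call is checked against the caller's permissions, and every attempt is logged, including refusals.

```python
from datetime import datetime, timezone
from typing import Callable

Handler = Callable[[dict], dict]


class ActionRegistry:
    """Server-side allowlist: only registered actions can run, each behind a permission."""

    def __init__(self) -> None:
        self._actions: dict[str, tuple[Handler, str]] = {}
        self.audit_log: list[dict] = []

    def register(self, name: str, handler: Handler, permission: str) -> None:
        self._actions[name] = (handler, permission)

    def execute(self, name: str, params: dict, caller_permissions: set[str], tenant: str) -> dict:
        # Every attempt is audited, including refusals and handler failures.
        entry = {"time": datetime.now(timezone.utc).isoformat(), "tenant": tenant, "action": name}
        try:
            if name not in self._actions:
                entry["outcome"] = "unknown_action"
                raise PermissionError(f"action {name!r} is not exposed")
            handler, permission = self._actions[name]
            if permission not in caller_permissions:
                entry["outcome"] = "denied"
                raise PermissionError(f"missing permission {permission!r}")
            result = handler(params)
            entry["outcome"] = "ok"
            return result
        except Exception:
            entry.setdefault("outcome", "error")  # keeps "denied"/"unknown_action" if already set
            raise
        finally:
            self.audit_log.append(entry)


registry = ActionRegistry()
registry.register(
    "create_ticket",
    lambda p: {"ticket_id": "T-1", "subject": p["subject"]},  # stand-in for a real ticketing call
    permission="tickets:write",
)
result = registry.execute("create_ticket", {"subject": "Broken hinge"}, {"tickets:write"}, tenant="acme")
```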

That is the agent layer in one sentence: discoverable capabilities, structured answers, controlled actions.

What this enables for real chatbot use cases

Once the widget becomes agent-ready, you unlock workflows that are hard to do reliably through page text alone.

A common example is product support. The user asks about a warranty edge case, a compatibility rule, or a policy detail. The page rarely contains the full answer. A RAG-powered widget can answer from documentation, but the agent also needs to know what to do next. A structured response can suggest a safe next step: offer to open a ticket, ask for confirmation, and collect only the minimum details.
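
The "collect only the minimum details" step can be sketched as a proposal builder. It keeps only the fields a ticket needs and reports what is still missing. `TICKET_REQUIRED_FIELDS`, `propose_ticket`, and the example values are hypothetical.

```python
# Hypothetical: the only fields the ticket action needs.
TICKET_REQUIRED_FIELDS = ("product", "issue_summary", "contact_email")


def propose_ticket(collected: dict) -> dict:
    """Suggest a ticket as the next step without submitting anything.

    Fields the conversation picked up but the ticket does not need are dropped,
    and the proposal is flagged so the agent must ask the user before submitting.
    """
    payload = {k: collected[k] for k in TICKET_REQUIRED_FIELDS if collected.get(k)}
    missing = [k for k in TICKET_REQUIRED_FIELDS if k not in payload]
    return {
        "suggested_action": "create_ticket",
        "requires_confirmation": True,
        "payload": payload,
        "missing_fields": missing,
    }


proposal = propose_ticket({
    "product": "X200 hinge kit",
    "issue_summary": "Hinge cracked after 14 months; is this covered by warranty?",
    "phone": "+1 555 0100",  # collected earlier, but not needed for a ticket
})
```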

Another example is inventory and pricing. Agents scraping the UI can misread availability labels and miss the difference between "in stock at this site" and "ships in three days." A widget with a controlled inventory action can return live results, scoped correctly to tenant and site, with a clear explanation for the user.
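
A controlled inventory action can encode this distinction directly in its response instead of leaving it to label text. The sketch uses an in-memory dictionary as a stand-in for a live inventory system. The keys, status strings, and `check_inventory` function are assumptions for illustration. The lookup key itself enforces scoping: a query for one tenant or site cannot return another's records.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class StockRecord:
    on_hand: int                 # units physically available at this site
    restock_days: Optional[int]  # days until inbound stock ships; None if none is expected


# Stand-in for a live inventory system, keyed by (tenant, site, sku).
INVENTORY = {
    ("acme", "berlin", "SKU-1"): StockRecord(on_hand=4, restock_days=None),
    ("acme", "berlin", "SKU-2"): StockRecord(on_hand=0, restock_days=3),
    ("globex", "berlin", "SKU-1"): StockRecord(on_hand=50, restock_days=None),
}


def check_inventory(tenant: str, site: str, sku: str) -> dict:
    record = INVENTORY.get((tenant, site, sku))
    if record is not None and record.on_hand > 0:
        return {"sku": sku, "status": "in_stock_at_this_site", "quantity": record.on_hand}
    if record is not None and record.restock_days is not None:
        return {"sku": sku, "status": "ships_later", "ships_in_days": record.restock_days}
    # Unknown key, or no stock and no inbound supply. Other tenants' data is never consulted.
    return {"sku": sku, "status": "unavailable"}
```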

A third example is lead capture and scheduling. Agents can fill forms, but the process is error-prone and breaks easily. A controlled booking action gives you a stable flow, consistent validation, and clean attribution in analytics.
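
A booking action gives you one place to validate input and tag attribution, whoever fills it in. The sketch assumes a hypothetical `book_demo` function, a one-hour minimum lead time, and a `source` field for analytics. None of these come from a specific product.

```python
import re
from datetime import datetime, timedelta, timezone
from typing import Optional

EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # deliberately loose shape check
MIN_LEAD_TIME = timedelta(hours=1)                    # hypothetical business rule


def book_demo(email: str, slot: datetime, source: str, now: Optional[datetime] = None) -> dict:
    """Validate a booking request the same way for humans and agents."""
    if now is None:
        now = datetime.now(timezone.utc)
    errors = []
    if not EMAIL_RE.match(email):
        errors.append("email: invalid format")
    if slot.tzinfo is None:
        errors.append("slot: must include a timezone")
    elif slot < now + MIN_LEAD_TIME:
        errors.append("slot: must be at least one hour in the future")
    if errors:
        return {"ok": False, "errors": errors}
    # `source` records who initiated the booking, e.g. "widget" or "agent", for analytics.
    return {"ok": True, "booking": {"email": email, "slot": slot.isoformat(), "attribution": source}}


now = datetime(2025, 1, 6, 9, 0, tzinfo=timezone.utc)
good = book_demo("ana@example.com", now + timedelta(days=1), source="agent", now=now)
bad = book_demo("not-an-email", now, source="agent", now=now)
```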

In each case, the agent gets a reliable interface. The business gets fewer broken flows and more traceability.

How this maps to HoverBot's existing capabilities

HoverBot already has the internal parts that make an agent layer valuable.

- It can answer using RAG knowledge bases built from files, text, and URLs.

- It can apply guardrails via classification to block disallowed or out-of-scope requests.

- It can detect and mask PII around model calls.

- It can keep audit logs, session monitoring, and analytics.

- And it supports multi-tenant configuration with modular skills like lead generation and product support.
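
This article does not describe how HoverBot detects PII. The following is therefore only a generic sketch of the "mask around model calls" pattern, using simple regular expressions. These are far less thorough than dedicated PII detectors. The patterns, placeholder format, and `call_model_safely` helper are assumptions, and `fake_model` stands in for a real LLM call.

```python
import re
from typing import Callable

# Illustrative patterns only; they will miss many real-world formats.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d ()-]{7,}\d"),
}


def mask_pii(text: str) -> tuple[str, dict[str, str]]:
    """Replace PII with numbered placeholders and return the placeholder mapping."""
    mapping: dict[str, str] = {}
    for label, pattern in PII_PATTERNS.items():
        def to_placeholder(match: re.Match, label: str = label) -> str:
            token = f"[{label}_{len(mapping) + 1}]"
            mapping[token] = match.group(0)
            return token
        text = pattern.sub(to_placeholder, text)
    return text, mapping


def unmask(text: str, mapping: dict[str, str]) -> str:
    for token, original in mapping.items():
        text = text.replace(token, original)
    return text


def call_model_safely(prompt: str, model: Callable[[str], str]) -> str:
    masked, mapping = mask_pii(prompt)
    reply = model(masked)          # the model only sees placeholders
    return unmask(reply, mapping)  # restore values for the end user


seen_by_model = []


def fake_model(prompt: str) -> str:
    seen_by_model.append(prompt)
    return "I will send the label to [EMAIL_1]."


reply = call_model_safely("Send the return label to ana@example.com or call +49 30 1234567", fake_model)
```

Whether to restore the original values in the reply is a policy choice. A deployment could, for example, keep placeholders in logs and unmask only for the end user.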

The agent layer does not replace these pieces. It makes them accessible in a way agents can use safely.

If you strip away the terminology, the practical change is this: the widget stops being just UI and becomes a small contract that says, "here is what I'm responsible for, here is what I'm allowed to do, and here is the safe way to do it."

The part teams underestimate: bot-to-bot coordination

On many sites, the user will soon have two assistants visible: the browser's agent panel and the site's chat widget. If both behave like primary copilots, the experience turns noisy. The user gets duplicated answers, conflicting suggestions, and aggressive popups fighting for attention.

An agent-ready widget should be able to switch behaviour when an agent is present. In practice, that means it becomes less chatty in the UI and more cooperative in the interface. It can still serve the human, but it should also work as a stable endpoint for the agent to call.
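
One way to express this switch is as two explicit presets. The sketch assumes a hypothetical `agent_present` signal. It does not specify how a site learns that an agent is driving the session, and the preset fields are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WidgetMode:
    proactive_popups: bool  # greeting bubbles, nudges, auto-open prompts
    reply_format: str       # "prose" for a human reader, "structured" for an agent caller
    actions_enabled: bool   # the controlled action path stays available in both modes


HUMAN_MODE = WidgetMode(proactive_popups=True, reply_format="prose", actions_enabled=True)
AGENT_MODE = WidgetMode(proactive_popups=False, reply_format="structured", actions_enabled=True)


def choose_mode(agent_present: bool) -> WidgetMode:
    # Quieter UI and more structured replies when an agent is present;
    # the human can still type into the widget either way.
    return AGENT_MODE if agent_present else HUMAN_MODE
```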

This is not about surrendering the relationship to the browser. It is about preventing collisions and keeping control of execution on your side.

Agent-readable hints, not hidden metadata

A lot of teams talk about hidden metadata because they want a way to pass extra context to agents. The safer framing is: publish agent-readable hints that are safe to expose, and keep everything privileged behind authentication and policy checks.

The capability description should never include secrets. It can say what actions exist and what confirmation rules apply. It should not contain internal API keys, private URLs, or anything you would not want seen by a crawler.
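
A pre-publish check can catch the obvious leaks before a manifest goes live. This is a hedged sketch that complements review rather than replacing it. The key-name pattern, the `.internal` hostname convention, and `lint_manifest` are assumptions. The check flags credential-like key names. It also flags URLs that point at private IP ranges (detected with Python's `ipaddress` module) or at internal hostnames.

```python
import ipaddress
import re
from urllib.parse import urlparse

# Hypothetical heuristics; tune them to your own naming conventions.
SECRET_KEY_RE = re.compile(r"(api[_-]?key|secret|token|password)", re.IGNORECASE)
URL_RE = re.compile(r"https?://\S+")


def _is_private_host(host: str) -> bool:
    if host == "localhost" or host.endswith(".internal"):
        return True
    try:
        return ipaddress.ip_address(host).is_private
    except ValueError:
        return False  # a regular DNS name; cannot tell from the string alone


def lint_manifest(node, path: str = "$") -> list[str]:
    """Walk a JSON-like manifest and report fields that look unsafe to publish."""
    problems: list[str] = []
    if isinstance(node, dict):
        for key, value in node.items():
            child = f"{path}.{key}"
            if SECRET_KEY_RE.search(key):
                problems.append(f"{child}: key name looks like a credential")
            problems.extend(lint_manifest(value, child))
    elif isinstance(node, list):
        for i, item in enumerate(node):
            problems.extend(lint_manifest(item, f"{path}[{i}]"))
    elif isinstance(node, str):
        for url in URL_RE.findall(node):
            if _is_private_host(urlparse(url).hostname or ""):
                problems.append(f"{path}: private URL {url}")
    return problems


draft = {
    "actions": [{"name": "create_ticket", "requires_confirmation": True}],
    "endpoint": "https://example.com/agent/actions",
    "debug": {"api_key": "REDACTED", "backend": "http://10.0.3.7:8080/tickets"},
}
issues = lint_manifest(draft)
```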

The power comes from what happens after discovery: actions are executed on the server under your controls, with short-lived tokens and tenant-level permissions. That is where privacy and security are enforced.
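
To illustrate short-lived tokens with tenant-level permissions, here is a standard-library-only sketch. It uses an HMAC-signed token that carries a tenant, a list of scopes, and an expiry. It is not a production token format. In practice, a vetted standard such as JWT handled by a maintained library is the safer choice. `issue_token`, `authorize`, `SERVER_KEY`, and the scope names are hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

# Server-side secret; it never appears in the public capability manifest.
SERVER_KEY = b"replace-with-a-long-random-server-secret"


def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).decode("ascii").rstrip("=")


def _unb64(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _sign(body: str) -> str:
    return _b64(hmac.new(SERVER_KEY, body.encode("utf-8"), hashlib.sha256).digest())


def issue_token(tenant: str, scopes: list[str], ttl_seconds: int = 300, now: Optional[float] = None) -> str:
    now = time.time() if now is None else now
    claims = {"tenant": tenant, "scopes": scopes, "exp": now + ttl_seconds}
    body = _b64(json.dumps(claims, sort_keys=True).encode("utf-8"))
    return f"{body}.{_sign(body)}"


def authorize(token: str, tenant: str, scope: str, now: Optional[float] = None) -> bool:
    """Accept only an untampered, unexpired token issued for this tenant and scope."""
    now = time.time() if now is None else now
    parts = token.split(".")
    if len(parts) != 2:
        return False
    # Constant-time comparison of the signature, done on bytes.
    if not hmac.compare_digest(parts[1].encode("utf-8"), _sign(parts[0]).encode("utf-8")):
        return False
    claims = json.loads(_unb64(parts[0]))
    return claims["exp"] > now and claims["tenant"] == tenant and scope in claims["scopes"]


t0 = 1_700_000_000.0
token = issue_token("acme", ["tickets:write"], ttl_seconds=300, now=t0)
```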

A practical rollout that stays small

The fastest path is to start with one tenant and a narrow scope, then expand.

Pick one "read" workflow and one "do" workflow. A read workflow is something like answering policy questions with citations. A do workflow is something like creating a ticket or scheduling a callback, with confirmation required. Once those two are stable, add one more action that saves users time, such as checking availability or retrieving order status, depending on your product.
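
The server can enforce the "confirmation required" rule for a do workflow with a two-step propose/confirm flow. The first call only records a pending action. Execution happens once, on explicit confirmation, and only before an expiry. `ConfirmationGate` and its parameters are hypothetical.

```python
import secrets
import time
from typing import Callable, Optional


class ConfirmationGate:
    """Propose first; execute only after the user confirms that exact pending request."""

    def __init__(self, ttl_seconds: int = 600) -> None:
        self.ttl = ttl_seconds
        self._pending: dict[str, tuple[Callable[[], dict], float]] = {}

    def propose(self, summary: str, run: Callable[[], dict], now: Optional[float] = None) -> dict:
        now = time.time() if now is None else now
        pending_id = secrets.token_urlsafe(8)
        self._pending[pending_id] = (run, now + self.ttl)
        # Nothing has executed yet; the agent shows `summary` to the user.
        return {"pending_id": pending_id, "summary": summary, "requires_confirmation": True}

    def confirm(self, pending_id: str, now: Optional[float] = None) -> dict:
        now = time.time() if now is None else now
        entry = self._pending.pop(pending_id, None)  # single use: removed on any confirm attempt
        if entry is None:
            return {"ok": False, "error": "unknown or already used"}
        run, expires_at = entry
        if now > expires_at:
            return {"ok": False, "error": "confirmation expired"}
        return {"ok": True, "result": run()}


created = []


def schedule_callback() -> dict:
    created.append("callback")  # stand-in for the real side effect
    return {"status": "scheduled"}


gate = ConfirmationGate(ttl_seconds=600)
proposal = gate.propose("Schedule a callback for tomorrow at 10:00", schedule_callback, now=0.0)
first = gate.confirm(proposal["pending_id"], now=30.0)
second = gate.confirm(proposal["pending_id"], now=31.0)
late = gate.propose("Schedule another callback", schedule_callback, now=0.0)
expired = gate.confirm(late["pending_id"], now=700.0)
```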

The key is restraint. An agent layer works best when it is small, explicit, and maintained like an API contract. If you publish 40 actions, you will maintain 40 actions. If you publish five, you will likely ship five that actually work.

The bottom line

Agentic browsers will make the web feel more task-driven. That shift will reward sites that give agents a reliable interface, and it will punish sites that force agents to guess from the DOM.

For chat widgets, the answer is not a redesign. It is a thin agent layer: make capabilities discoverable, return structured outputs, and execute actions through controlled pathways with guardrails, PII protection, and auditing.

In other words, treat your widget as the safest agent API your site can offer.

Share this article

Related Articles

The agentic web is arriving

A new generation of browsers such as OpenAI Atlas, Perplexity and Diabrowser are quietly rewriting the rules of the internet. They do not just give you a search bar. They give you an agent.

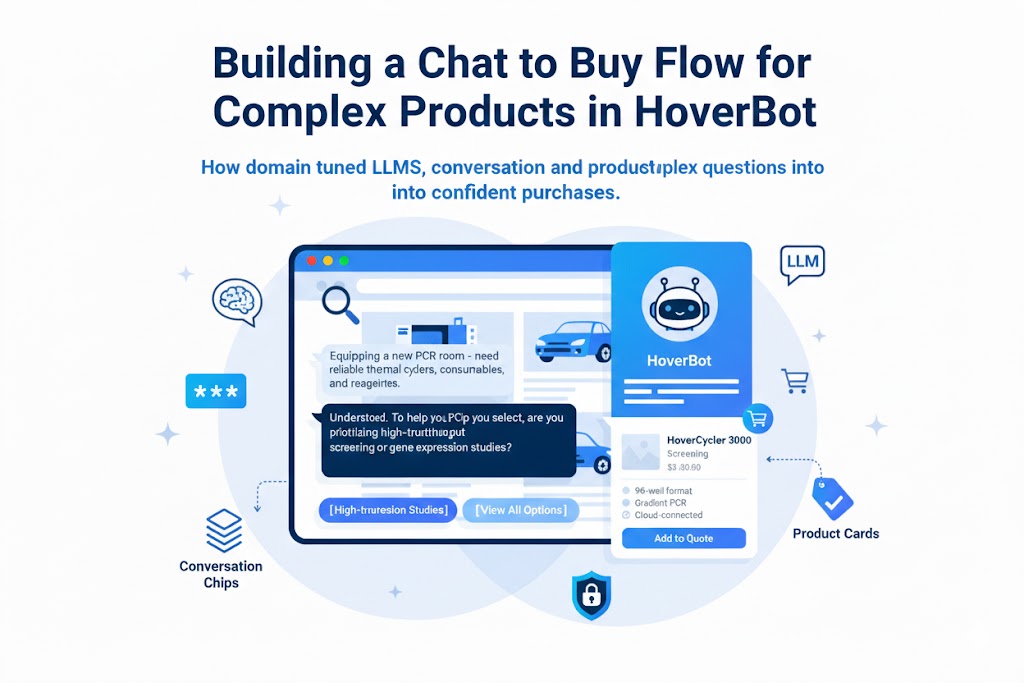

Chat-to-Buy Flows for Complex Catalogs: A Technical Guide

How to build conversational commerce for products that need consultation, not search. Includes constraint matching, dynamic chip generation, compatibility engines, and the metrics that separate working implementations from demos.